Read To Me - epub to TTS mp3s

Wed March 29 2023 by Christopher Aedo

For a long time I've wanted to start knitting, but I never end up putting in the time to learn and practice. One thing that held me back was that I knew I couldn't do anything else during all the hours I would spend getting the hang of it. Recently I thought it would be a perfect time to listen to audio books! Then almost immediately I realized the audio version of the book I was reading had a long wait at the library, and I didn't want to spend $15+ for a different version of a book I already purchased. What if I could make my own audiobook version?

I had heard some pretty amazing speech synthesisers that used machine learning to develop the voices. I was especially impressed with Coqui AI TTS, so I started playing around with the sample voices that were already developed. I did look into building my own voice model, but that takes a fair bit of effort. After listening to text read by the included VITS models, I thought this could really work out well.

I threw together a simple Python script, epub2tts, and pointed it at an epub I had. Initially I ran into lots of little problems that were pretty easy to sort out. For instance, some chapters were just too long and would cause Coqui to crash, so I picked a size at which I knew the problems consistently showed up, and split any chapter that reached it into a new "chapter". Other than a few other minor tweaks, there wasn't much left to do before it was working reliably well.
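To give a rough idea of the chapter-splitting step, here is a minimal sketch. It is not the actual epub2tts code: the function name `split_long_chapter`, the `max_chars` parameter, and its default value are all made up for illustration, and it assumes paragraphs are separated by blank lines. It only shows one plausible way to break an over-long chapter into smaller pieces before handing each piece to the TTS engine.

```python
def split_long_chapter(text: str, max_chars: int = 10000) -> list[str]:
    """Split chapter text into pieces of at most max_chars characters.

    Hypothetical helper: breaks at paragraph boundaries where possible,
    and hard-splits any single paragraph that is itself too long.
    """
    chunks = []
    current = ""
    for paragraph in text.split("\n\n"):
        paragraph = paragraph.strip()
        if not paragraph:
            continue
        # A single paragraph over the limit gets cut into fixed-size slices.
        while len(paragraph) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(paragraph[:max_chars])
            paragraph = paragraph[max_chars:]
        candidate = paragraph if not current else current + "\n\n" + paragraph
        if len(candidate) > max_chars:
            # Adding this paragraph would overflow, so start a new "chapter".
            chunks.append(current)
            current = paragraph
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    # Made-up sample text: five short paragraphs, with a tiny limit for the demo.
    chapter = "\n\n".join(["a" * 40] * 5)
    for number, piece in enumerate(split_long_chapter(chapter, max_chars=100), start=1):
        print(f"piece {number}: {len(piece)} characters")
```

Breaking at paragraph boundaries keeps each piece ending at a natural pause, which should matter for how the audio sounds when the pieces are played back to back.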

I'm really happy with the result, and find it does sound great. Of course it's not at the level of having a real human read, but it's far better than I expected. It's been really easy to listen to and forget that it was all entirely computer generated.

Also it's made it even more fun now to practice knitting!

Helpful tools for organization

Lately I've been feeling really good about the tools I'm using to keep my work (and occasionally personal) information and tasks organized. As both a reminder to myself if I ever need to recreate everything from scratch, and something I can share with others who might find this useful, I …

read more

Migrating from a hosted Google domain

These instructions are specifically intended for folks moving from a domain hosted by Google (Google Suite, set up back in 2006 when it was free). In this case we have a bunch of email addresses at a specific domain (mydomain.com will be used in the example below), and we …

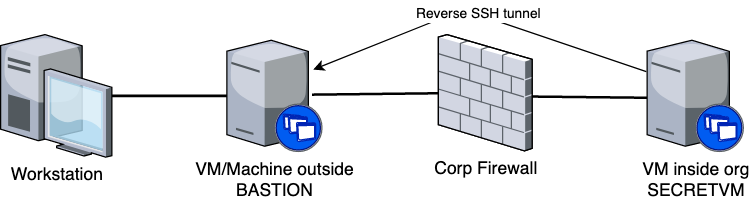

read more

Reverse SSH tunnel with SOCKS proxy

(I'm writing this mostly for myself so if/when some day in the future I want to set this up again and can't remember how, I've got something to reference.)

If you have a scenario where you'd like to access machines behind a corporate firewall without getting on their VPN …

read more

No Innocent Bystanders

Systemic racism impacts every person in this country. For some it means they’re more likely to get a job interview just because of their name. For others it means they’re more likely to be shot during a traffic stop just because of the color of their skin. Sociologists …

read more

Scandir errors with scripted backups on OSX

A few years ago I documented how I automatically back up my computer, plus my family members' machines, and the process has been working really well. Recently, however, I noticed some directories were not getting backed up on OSX machines. Turns out since I updated to Catalina, the stricter security …

read more

Best Headphones Ever

Around 10 years ago I was traveling enough that I thought I deserved some fancy noise-cancelling headphones. At the time, Bose was the king of that space so I bought the QuietComfort 2 headphones. I loved them, but I could only keep them on for maybe two hours at …

read more

Whats On Tap, April 2019

Quick update to the blog about what's on tap these days!

It's been quite a while since the last update. That is mostly because I've been drinking less lately. Busy, and watching my calories pretty closely while I try to drop a few pounds. Usually that means I don't …

read more

Whats On Tap, November 2018

Sticking with my promise to update the blog when I rotate what's on tap, here comes November's entry.

The last round of beer lasted pretty long. That's due to only having one bbq party, and me drinking less beer these last few months.

First up is another pale ale. Basically …

read more

Whats On Tap, August 2018

I promised to do this whenever something on tap changed but I completely failed to stay on top of that. Instead maybe I'll just do it when ALL the taps have rotated, as I am doing this time!

The Saison is tasty, but came out a little higher gravity than …

read more